132. Analysing AI: Fast, Gullible, and Lazy

I've been studying AI for about a year. It has marked weaknesses in areas that might make it unsuitable for replacing humans in the workplace.

Over the last year I’ve been asking AI questions and taking notes. I ask it about people, science, research, and world events, and I notice when and how it makes errors. This morning I asked it about myself, and I was very concerned by the result. Not because I necessarily disagreed with its assessment, but because there are serious problems with how it gathers data and how gullible it is. It believes anything it reads unless there is a contradicting source.

Fast: AI is at least thousands of times faster than a human in the areas it is good at, perhaps millions of times faster. It can also search a wide array of sources, some of which pre-AI Google might have missed. It’s picking up my papers on related searches even if I published them only yesterday. However, when I asked whether Greta Thunberg was okay, it said she was just fine and didn’t mention that she had been kidnapped.

Gullible: AI seems to believe anything it reads unless the information is contradicted somewhere else. When I asked it about Ramin Shokrizade (me), it pulled 17 sources out of the hundreds available. Seventeen isn’t great, but it’s okay. But then it didn’t actually use those 17 sources; it relied primarily on the most information-dense one, which was of course my LinkedIn profile.

I could be lying about 100% of what’s on my LinkedIn profile. There are enough secondary sources to prove almost everything on my long profile, but it didn’t bother to check them. That’s one of the reasons I write so much: to demonstrate that I actually have the knowledge I claim to have. A catfish could simply copy my profile (I discuss this in more detail below), and AI might believe that this other person has all those abilities.

When I ran the AI check on EA’s EOMM (Engagement Optimized Matchmaking) research, it detected at least three problems:

Conflict of interest

No human subjects evaluated

Unethical practices

That was a bit impressive. Not because these things wouldn’t be obvious even to a layperson on a careful reading, but because it could identify them almost instantly, without the two-plus hours it would take someone to read the study carefully. Since humans increasingly can’t be bothered to read, and certainly not anything of that length (a dense six pages), this is better than nothing.

But then it missed some glaring cherry-picking issues. These are the first sorts of errors, or fraud, that a peer reviewer looks for, and they were not hard to find. But the AI simply assumed that everything written was okay.

As you can see, AI is still completely dependent on the information that humans provide it. It’s not at all good at figuring things out on its own. It doesn’t even seem to try.

Lazy: The first page of the AI’s review of me was filled with all sorts of flowery accolades. If I had an ego, I’d be very happy about that. Instead, I was quite alarmed, because it was taking all that information from me, from what I wrote on my LI profile. What the owner of an LI profile writes on it could be 100% propaganda. Nothing on it should be trusted unless it can be backed up by trusted third-party sources.

In my case those sources are available, and some were on the list of 17 sources it claimed to consider. But it didn’t cite them in the printout. This is worse than useless: it means AI can propagate misinformation and mislead the people who use it.

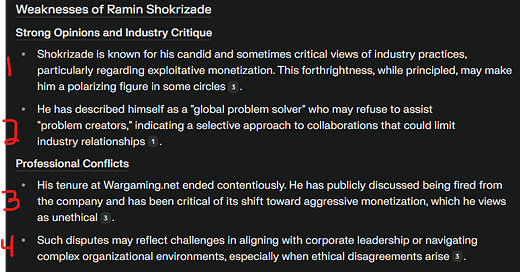

It also made these claims about me when I asked it if I had been involved in any controversies:

That’s a fair analysis. I’m not a yes man. Companies are filled with yes people; you don’t need me for that. Diversity of thought is only “polarizing” if there is only one pole in your company. This is the “monoculture” that poisons most AAA companies and leads to toxic positivity. That lack of diversity also robs teams of productivity. Google’s expensive Project Aristotle proved that definitively.

This is interesting because it was ripped from an older version of my LI profile. The Ramin of 2025 is quite willing to help problem creators, as long as they want to solve those problems. This smacks of cherry-picking on the part of the AI. If it’s only going to use me as a source, it should not misquote me. Directly from my current LI profile:

Almost all of what the AI wrote here is verifiably untrue, according to sources it claimed to have access to. Technically I was “laid off for lack of work”, and I discussed this for the first time in my interview with Josh Bycer. The real reason, which I also discussed there, was that a catfish with zero game industry experience was hired by his drinking buddy (who happened to be the CEO, Victor) and proceeded to copy my LI profile 100% (like a scene from Single White Female). I reported this dangerous obsession to my boss, Chris Keeling. In 2015 the obsession escalated to the catfish calling me into a dark room and telling me that all my future work product would go to him and he would take credit for all of it. This would allow him to be me, and would allow me to keep my job. When I disagreed, he got founders who had never worked with me to “lay me off”. I don’t think this counts as “contentious”. Normally we call that extortion.

My relationship with the rest of the company was quite positive, and they even paid to fly me out to speak at their conferences after I left. Also, the aggressive monetisation that I complained about didn’t start until 2021, six years after I left. Leaving that date out paints a very different picture of the situation. The monetisation had nothing to do with my leaving Wargaming; we never had any disagreements over ethics.

Okay, wow. The founder I worked directly under in Belarus and Russia, Sergey Burkatovskiy, was very tough on me in my Minsk hiring interview. That was the same secretive meeting where Chris Taylor handed Gas Powered Games over to Wargaming. But once Sergey realized what I could do, he would give up his own home to me any time I was deployed to Minsk or Saint Petersburg. He always showered me with praise and made me feel safe. Granted, it can be very hard for anyone to navigate complex organisational environments. You might say the CEO of Wargaming had some difficulties with this (from the Wargaming wiki):

Working for Wargaming during the Crimean crisis, and being required to travel to studios on multiple continents, felt like living in a James Bond movie. The levels of intrigue were extreme, and at times the situation was literally dangerous. I was highly respected inside the company because I was able to navigate these complexities successfully. I think that by leaving out this context, the AI comes up with some very inaccurate conclusions.

I may not have always agreed with Victor, but I know that he is very loyal to Russia and doesn’t deserve to be under threat of assassination as some sort of terrorist. The same can be said of all my friends and colleagues at the Lesta studio in Saint Petersburg. I have nothing but nice things to say about them. While I’m not particularly religious, I pray for their safety.

The Current State of AI in 2025

AI in its current state is a powerful tool in the hands of those who understand its strengths and weaknesses. As I discussed in my last paper, on Moneyball 2.0, AI can be used to replace a lot of employees, but only under very specific conditions. Because it can boost the productivity of technical (non-manual-labor) adders, you need fewer adders. But you still need some adders to give the AI instructions and to check its output.

In addition, you should attach an AI liaison to adder groups to boost the effectiveness of AI inputs and outputs. That could mean you actually end up needing more people than you had before. So AI can help you cull redundancies, but since you should only rarely have redundant multipliers, you can’t replace a multiplier with AI. Maybe in the future, but not in 2025.

For everyone else: you really should look at the sources behind any AI output. Are those sources independent and reliable? Were they even used in the report? Are there sources that contradicted the report but were mysteriously ignored?

AI doesn’t tell you the truth; it tells you what it thinks you want to hear. As it learns more from humans about what humans want, it’s only a matter of time before AI ends up as another “toxic positivity” employee that always agrees and never disagrees.

When I point out to AI that it’s making errors (usually because it’s telling me what it thinks I want to hear), it never responds or corrects itself. Maybe I’m not talking to it properly, but AI seems to be fast when it is “on the rails”, like a train, and if it ever goes off those rails, it crashes 100% of the time.

I keep challenging AI to see what its limitations are. I expect those limitations to change rapidly over time. If I notice a significant milestone, I will report on it.